Humane, the startup founded by ex-Apple employees Imran Chaudhri and Bethany Bongiorno, has given a first live demo of its new device: a wearable gadget with a projected display and AI-powered features intended to act as a personal assistant.

Chaudhri, who serves as Humane’s chairman and president, demoed the device onstage during a TED talk, a recording of which has been acquired by Inverse and others ahead of its expected public release on April 22nd.

“It’s a new kind of wearable device and platform that’s built entirely from the ground up for artificial intelligence,” Chaudhri says in comments transcribed by Inverse. “And it’s completely standalone. You don’t need a smartphone or any other device to pair with it.”

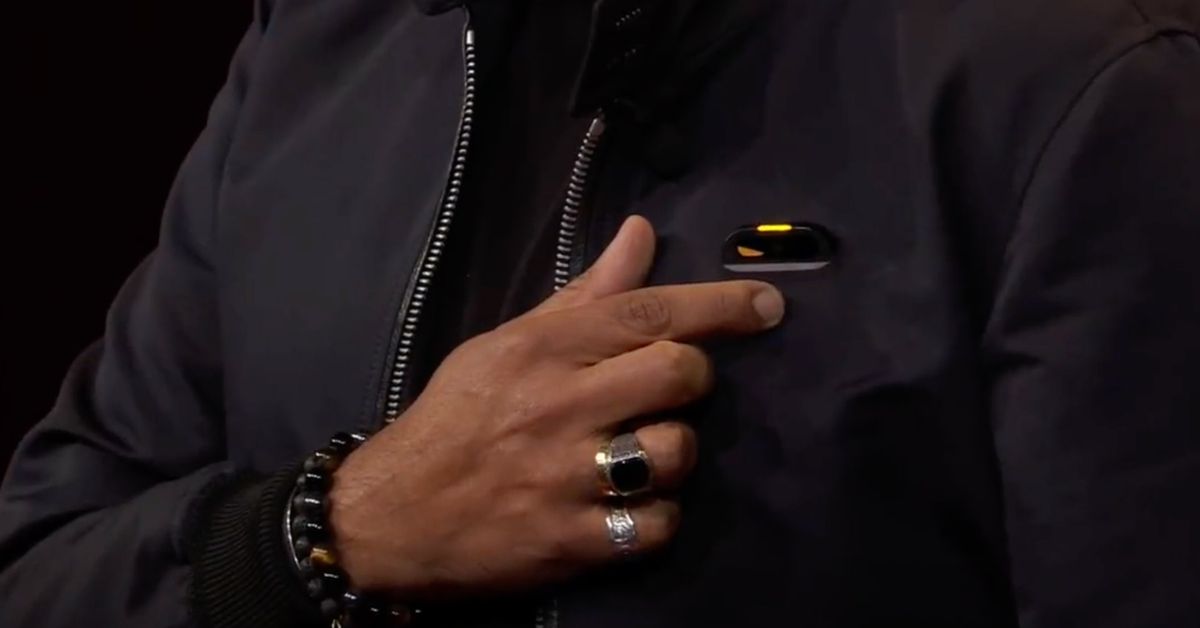

Thanks to the presentation, we now have at least some idea of what the device might be able to do, and how it might go about doing it without a traditional touchscreen interface. During the demo, Chaudhri wears the device in his breast pocket, tapping it in lieu of a wake word, and then issuing voice commands as you would with an Amazon Echo smart speaker. Axios notes that the device also supports gesture commands.

“Imagine this, you’ve been in meetings all day and you just want a summary of what you’ve missed,” Chaudhri says, before tapping the device and asking to be caught up. In response, the device offers a summary of “emails, calendar invites, and messages.” It’s unclear exactly where the wearable is pulling this information from given Chaudhri’s comments about not needing a paired smartphone, so presumably it’s connected to cloud-based services.

In addition to spoken responses, the device is also able to project a screen onto nearby surfaces. At one point in the presentation, Chaudhri receives a phone call from Bethany Bongiorno (Humane co-founder, CEO, and Chaudhri’s wife), which the device projects onto his hand. The camera angle obscures how Chaudhri picks up the call, and at no point does he seem to interact with the projected screen on his hand, despite the interface showing what look like buttons. But he’s able to hold the call as though using a phone on speakerphone.

As well as being able to project a screen, the device also includes a camera that’s shown identifying objects in the world around it, similar to what we saw teased in a leaked investor pitch deck. Onstage, Chaudhri uses the camera to identify a chocolate bar and advise him whether or not to eat it based on his dietary requirements.

Finally, there’s a translation demonstration, where Chaudhri holds down a button on the device, says a sentence, and then waits as Humane’s wearable reads out the same sentence in French. In the clip, Chaudhri never instructs the device to translate his words, so it’s not clear how one activates this functionality.

“We like to say that the experience is screenless, seamless, and sensing, allowing you to access the power of compute while remaining present in your surroundings, fixing a balance that’s felt out of place for some time now,” Chaudhri says, per Inverse.

Humane is far from the first company to have attempted to offer these kinds of features, but it’s notable that it’s attempting to do it all in a relatively compact, screenless device that doesn’t require a paired smartphone. But what’s unclear to me is how usable the device will be when you’re in public or in a hurry. For all their faults, smartphones are still great at getting you quick access to the details you need, and showing them on a screen that only you can see, and it’s not clear whether Humane’s combination of a projected screen and speakers is capable of matching that just yet.
