
On Google I/O keynote day, the search and internet advertising giant puts forth a rapid-fire stream of announcements at its developer conference, including unveilings of many projects it has recently been working on.

Since we know you don’t always have time to watch a two-hour presentation, the TechCrunch team took that on and delivered story after story on new products and features. Here, we give you quick hits of the biggest news from the keynote as they were announced, all in an easy-to-digest, easy-to-skim list. Here we go:

Google Maps

Image Credits: Google

Google Maps unveiled a new “Immersive View for Routes” feature in select cities. The new feature brings all of the information that a user may need into one place, including details about traffic simulations, bike lanes, complex intersections, parking and more. Read more.

Magic Editor and Magic Compose

We always want to change something about the photo we just took, and now Google’s Magic Editor uses AI to make more complex edits to specific parts of a photo, such as the foreground or background. It can also fill in gaps in the photo or even reposition the subject for a better-framed shot. Check it out.

There is also a new feature called Magic Compose, demoed today, that rewrites texts in different styles within messages and conversations. “For example, the feature could make the message sound more positive or more professional, or you could just have fun with it and make the message sound like it was ‘written by your favorite playwright,’ aka Shakespeare,” Sarah writes. Read more.

PaLM 2

Image Credits: Google

Frederic has your look at PaLM 2, Google’s newest large language model (LLM). He writes, “PaLM 2 will power Google’s updated Bard chat tool, the company’s competitor to OpenAI’s ChatGPT, and function as the foundation model for most of the new AI features the company is announcing today.” PaLM 2 also features improved support for writing and debugging code. More here. Kyle also takes a deeper dive into PaLM 2, with a more critical look at the model through the lens of a Google-authored research paper.

Bard gets smarter

Good news: Google is not only removing its waitlist for Bard and making it available, in English, in over 180 countries and territories, but it’s also launching support for Japanese and Korean, with a goal of supporting 40 languages in the near future. Also new is Bard’s ability to surface images in its responses. Find out more. In addition, Google is partnering with Adobe on some art generation capabilities via Bard. Kyle writes that “Bard users will be able to generate images via Firefly and then modify them using Express. Within Bard, users will be able to choose from templates, fonts and stock images as well as other assets from the Express library.”

Workspace

Image Credits: TechCrunch

Google’s Workspace suite is also getting the AI touch to make it smarter, with the addition of automatic table (but not formula) generation in Sheets and image creation in Slides and Meet. Initially, table generation is fairly simple, though Frederic notes there is more to come when it comes to using AI to create formulas. The new features for Slides and Meet let you type in what kind of visualization you are looking for, and the AI will create that image. In Google Meet specifically, that means custom backgrounds. Check out more.

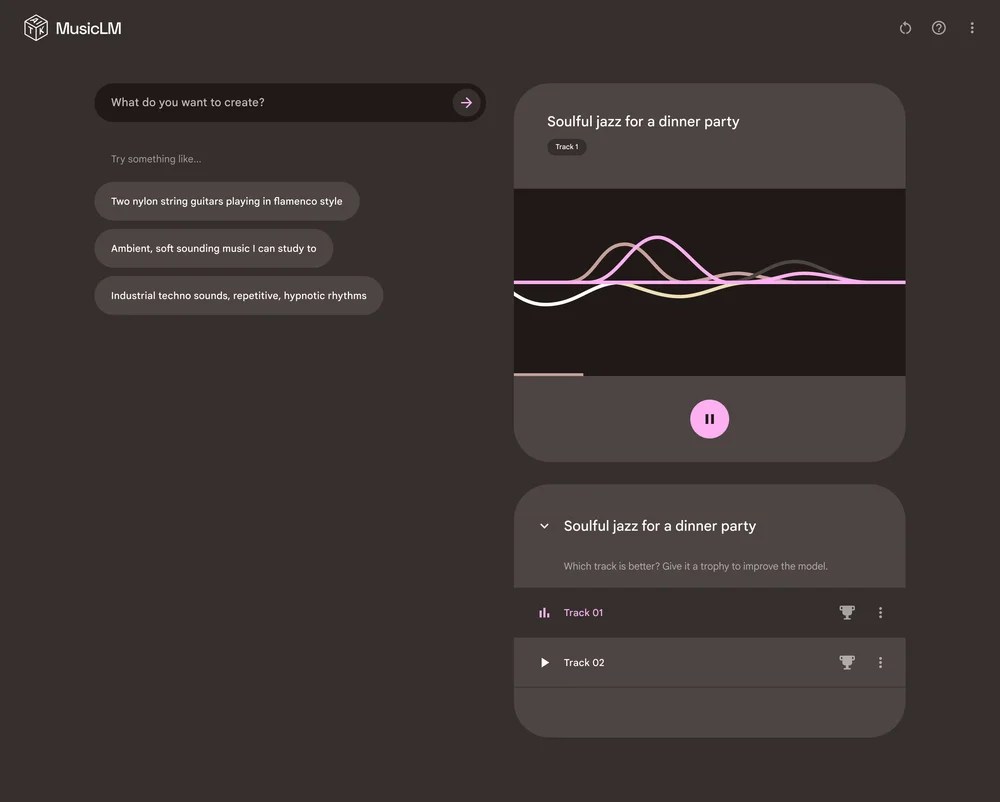

MusicLM

Image Credits: Google

MusicLM is Google’s new experimental AI tool that turns text into music. Kyle writes that, for example, if you are hosting a dinner party, you can simply type “soulful jazz for a dinner party” and have the tool create several versions of the song. Read more.

Search

Google Search has two new features aimed at helping users better understand the content and context of an image they are viewing in the search results. Sarah reports that these include more information through an “About this Image” feature and new markup in the file itself that will allow images to be labeled as “AI-generated.” Both extend work already underway and are meant to provide more transparency on whether an “image is credible or AI-generated,” although they are not a cure-all for the larger problem of AI image misinformation.

Aisha has more on Search, including that Google is experimenting with an AI-powered conversational mode. She describes the experience this way: “users will see suggested next steps when conducting a search and display an AI-powered snapshot of key information to consider, with links to dig deeper. When you tap on a suggested next step, Search takes you to a new conversational mode, where you can ask Google more about the topic you’re exploring. Context will be carried over from question to question.”

Google also introduced a new “Perspectives” filter that will soon appear at the top of some Search results when the results “would benefit from others’ experiences,” according to the company. These include posts on discussion boards, Q&A sites and social media platforms, including those with video. Think of it as an easier way to find Reddit links or YouTube videos, Sarah writes.

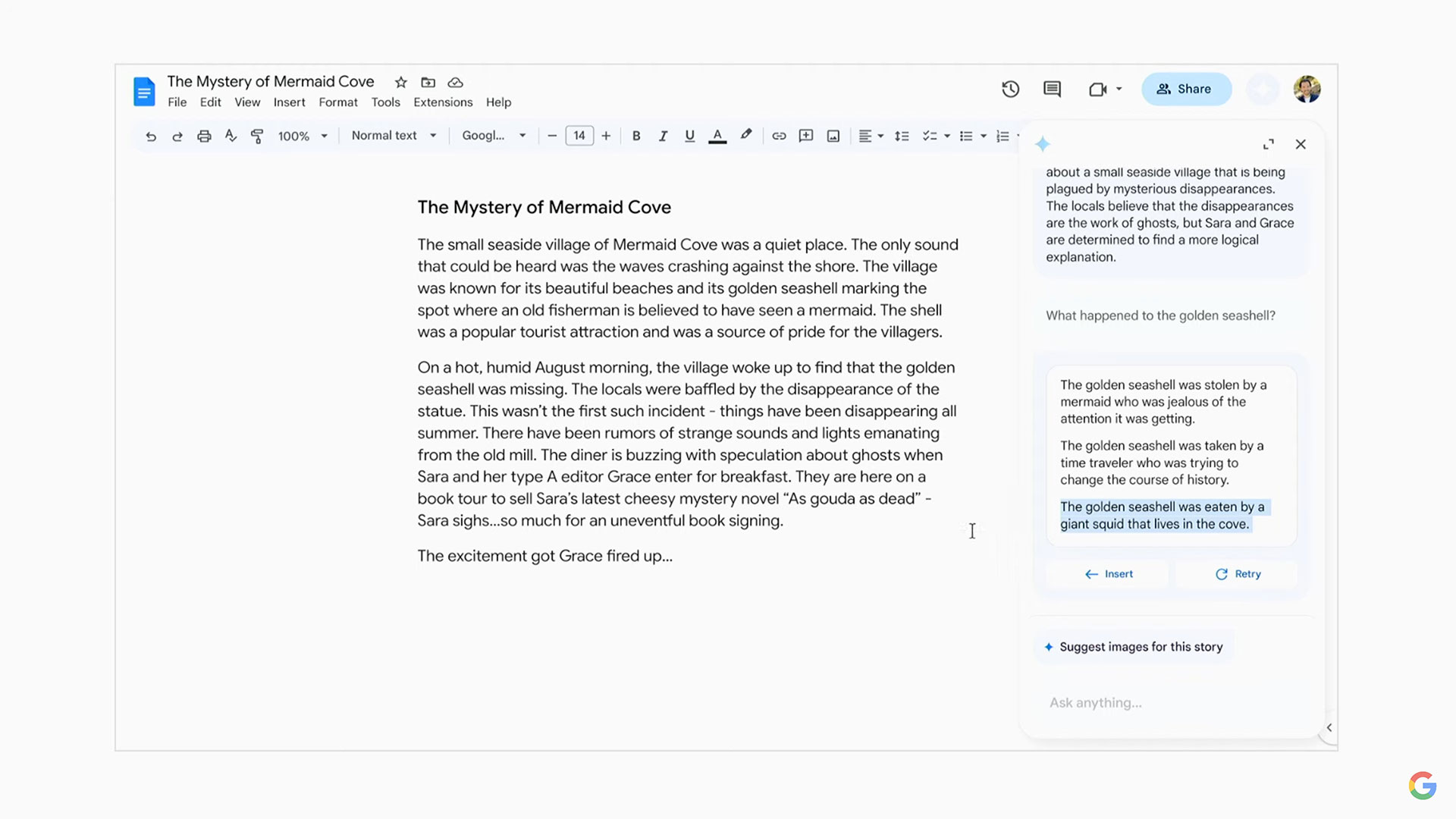

Sidekick

Image Credits: Google

Darrell has your look at a new tool unveiled today called Sidekick, writing that it is designed “to help provide better prompts, potentially usurping the one thing people are supposed to be able to do best in the whole generative AI loop.” Sidekick will live in a side panel in Google Docs and is “constantly engaged in reading and processing your entire document as you write, providing contextual suggestions that refer specifically to what you’ve written.”

Codey

We like the name of Google’s new code completion and code generation tool, Codey. It’s part of a number of AI-centric coding tools launching today, including a chat tool for asking questions about coding, and it is Google’s answer to GitHub’s Copilot. Codey is specifically trained to handle coding-related prompts and is also trained to handle queries related to Google Cloud in general. Read more.

Google Cloud

There’s a new A3 supercomputer virtual machine in town. Ron writes that “this A3 has been purpose-built to handle the considerable demands of these resource-hungry use cases,” noting that A3 is “armed with NVIDIA’s H100 GPUs and combining that with a specialized data center to derive immense computational power with high throughput and low latency, all at what they suggest is a more reasonable price point than you would typically pay for such a package.”

Imagen in Vertex

Google also announced new AI models heading to Vertex AI, its fully managed AI service, including a text-to-image model called Imagen. Kyle writes that Imagen was previewed via Google’s AI Test Kitchen app last November. It can generate and edit images as well as write captions for existing images.

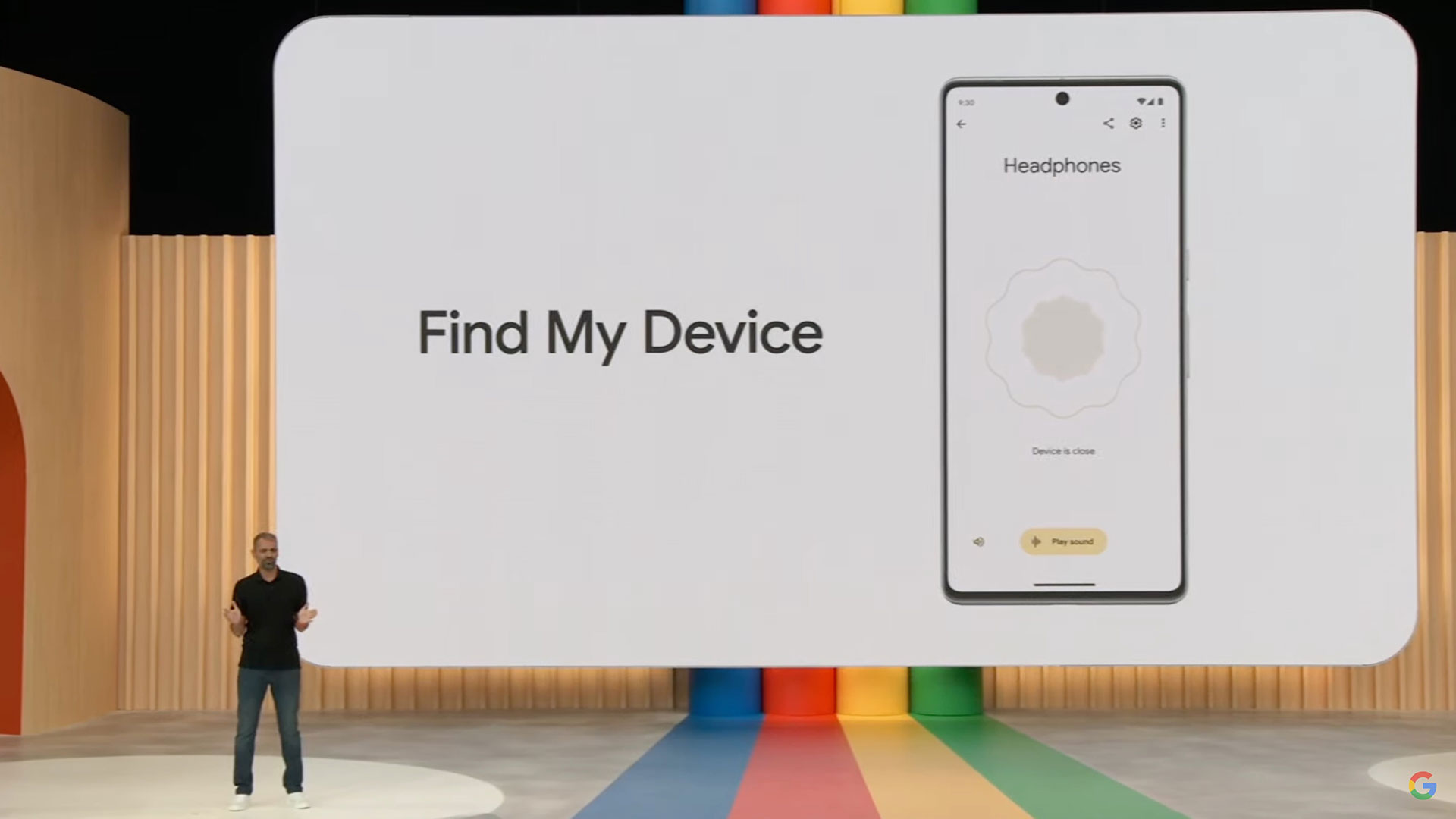

Find My Device

Image Credits: TechCrunch

Piggybacking on Apple and Google teaming up on Bluetooth tracker safety measures and a new specification, Google introduced a series of improvements to its own Find My Device network, including proactive alerts about unknown trackers traveling with you, with support for Apple’s AirTag and others. New features will include notifying users when their phone detects an unknown tracker moving with them, as well as connectivity with other Bluetooth trackers. The goal is to “offer increased safety and security for their own respective user bases by making these alerts work across platforms in the same way — meaning, for example, the work Apple did to make AirTags safer following reports they were being used for stalking would also make its way to Android devices,” Sarah writes.

Pixel 7a

Image Credits: Google

Google’s Pixel 7a goes on sale May 11 for $499, $100 less than the Pixel 7. Like the Pixel 6a, it has a 6.1-inch screen, versus the Pixel 7’s 6.3-inch display. It also launched in India. When it comes to the camera, it has a slightly higher pixel density, but Brian said, “I really miss the flexibility and zoom of the 7 Pro, but I was able to grab some nice shots around my neighborhood with the 7a’s cameras.” Its new chip does enable features like Face Unblur and Super Res Zoom. Find the full breakdown here.

Project Tailwind

The name sounds more like an undercover government assignment, but to Google, Project Tailwind is an AI-powered notebook tool it is building with the aim of taking a user’s freeform notes and automatically organizing and summarizing them. The tool is available through Labs, Google’s refreshed hub for experimental products. Here’s how it works: users pick files from Google Drive, then Project Tailwind creates a private AI model with expertise in that information, along with a personalized interface designed to help sift through the notes and docs. Check it out.

Generative AI wallpapers

Now that you’ve got that new Pixel 7a in your hand, you have to make it pretty! This fall, Google will roll out generative AI wallpapers that let Android users answer suggested prompts to describe their vision. The feature will use Google’s text-to-image diffusion models to generate new and original wallpapers, and the color palette of your Android system will automatically match the wallpaper you’ve selected. More here.

Wear OS 4

Google debuted the next version of its smartwatch operating system, Wear OS 4. Here’s what you’ll notice: improved battery life and functionality, plus new accessibility features like text-to-speech. Developers also have new tools to build Wear OS watch faces and publish them to Google Play. Watch for Wear OS 4 to launch later this year. Read more. Other new apps are also coming to smartwatches, including improvements to Google’s own apps, such as Gmail and Calendar, as well as updates from WhatsApp, Peloton and Spotify.

Universal Translator

Image Credits: Google

Google also revealed today that it is testing a powerful new translation service that translates video into a new language while also synchronizing the speaker’s lips with words they never spoke. Called “Universal Translator,” it was shown as “an example of something only recently made possible by advances in AI, but simultaneously presenting serious risks that have to be reckoned with from the start,” Devin writes. Here’s how it works: the “experimental” service takes an input video, in this case a lecture from an online course originally recorded in English, transcribes the speech, translates it, regenerates the speech (matching style and tone) in that language, and then edits the video so that the speaker’s lips more closely match the new audio. More on this.

Pixel Tablet

Image Credits: Google

You knew it was coming, and we can confirm that the Pixel Tablet is finally here. While Brian thought the interface looked like a “giant Nest Home Hub,” he did like the dock and the design.

And since tablets are used primarily at home, Brian notes that the Pixel Tablet is “not just a tablet — it’s a smart home controller/hub, a teleconferencing device and a video streaming machine. It’s not going to replace your television, but it’s certainly a solid choice to watch some YouTube.” Check out more here.

Pixel Fold

Image Credits: Google

One big announcement had already dropped before the keynote: as Brian covered, Google used May 4 (aka “May the Fourth Be With You” Day) to reveal that it has a foldable Pixel phone on the way. In a new story, Brian does a deep dive into the phone, which he writes Google has been working on for five years.

He also notes that “the real secret sauce in the Pixel Fold experience is, unsurprisingly, the software…The app continuity when switching between the external and internal screens is quite seamless, allowing you to pick where you left off as you change screen sizes. Naturally, Google has optimized its most popular third-party apps for the big screen experience, including Gmail and YouTube.” Read more here.

Firebase

Firebase, Google’s backend-as-a-service platform for application developers, has some new features, including the addition of AI extensions powered by Google’s PaLM API and opening up the Firebase extension marketplace to more developers.

Google’s Play Store gets some AI love

Sarah and Frederic teamed up to report on new ways developers can use Google’s AI to build and optimize their Android apps for the Play Store, alongside a host of other tools to grow their apps’ audiences through automated translations and other promotional efforts.

New features and updates include:

- Writing Play Store listings: Using Google’s PaLM 2 model, “All the developers have to do is fill out a few prompts (audience, key theme, etc.) and the system will generate a draft, which they can then edit to their heart’s content.”

- Summary of app reviews: “For now, though, that’s only available in English and only for positive reviews.”

- Store Listing Groups: Working in tandem with the custom store listings that Google kicked off last year, Store Listing Groups are created by customizing the base listing and overriding specific elements.

- Promotional Content: “The Play Store will also now incorporate in-app events in new places, including through Play Store notifications, the For You section on the Apps and Games tabs on the Play Store app, within Play Store search results, beneath the search results for a specific app by its title, and even on the Search screen itself, before you type in a query, above other recommendations.”

Developers also have new security features

In addition to the Play Store getting AI updates, Sarah writes that developers also now have new security and privacy features to play around with. Some of these include a new beta version of the Play Console app and changes to the Data safety section on the Play Store.

Meanwhile, Play Store users can receive prompts to update their apps, either pushed out by developers or triggered automatically when an app is crashing. More here.

Health Connect

Image Credits: Google

Health Connect, Google’s platform for storing health data, may currently be in beta, but Aisha writes that it will become part of Android and mobile devices with the release of Android 14 later this year.

Upcoming features include exercise routes, so you can share maps of where you run, an easier way to log menstrual cycles, and sharing controls. In addition, MyFitnessPal now integrates with Health Connect, giving users with Type 1 and Type 2 diabetes access to glucose data within the MyFitnessPal app. Read more.

More developer tools

- Android Studio is getting an AI infusion with Studio Bot, a new conversational experience in Android Studio Hedgehog that helps developers write code, fix bugs and answer more general coding questions. Check it out.

- ML Hub is a new one-stop destination for developers who want to get more guidance on how to train and deploy their ML models, no matter whether they are in the early stages of their AI career or seasoned professionals. It comes with a toolkit of common use cases that Google intends to regularly update and add to in the future. More here.

- There is also new functionality for designing Wear OS watch faces.

- Over 1 million Flutter-based apps have been published. The open-source multi-platform application framework will see some changes, including easier integration of Flutter components into existing web apps.

Project Starline

Image Credits: Google

Google unveiled the latest prototype of Project Starline, its 3D teleconferencing video booth. Brian notes the biggest changes include less hardware (for example, fewer cameras) and more reliance on AI and ML to generate the three-dimensional images. More here.

Connected car experience

Rebecca and Kirsten teamed up to bring you all of the automotive news from today’s conference, so let’s dive in:

- First is a round-up of new features and services for cars, including video conferencing, gaming and YouTube. They write that “the company has been making inroads into automotive via two paths: Android Auto, an app that runs on the user’s phone and wirelessly communicates with and projects navigation, parking, media and messaging to the vehicle’s infotainment system, and Google built-in, which is powered by its Android Automotive operating system and integrates Google services directly into the vehicle. Android Automotive OS is modeled after its open-source mobile operating system that runs on Linux. But instead of running smartphones and tablets, Google modified it so automakers could use it in their cars.”

- Updates to Android Auto that include conferencing apps from familiar names like Cisco, Zoom and Microsoft.

- Adding YouTube to more cars, starting with Polestar.
